Stop and read! Are you prepared for an eightfold increase in network bandwidth usage over the next five years?

Traditionally, software has been deployed within company premises using the client-server model. The model has been used so extensively that it would be difficult to find a business without such an infrastructure in place. Whenever at least two workers needed to work on the same data simultaneously, the client-server model was employed.

Typically, a computer or a number of computers would act as the server. The computers used by workers, called clients, connected to the server when needed through a local area network or a wide area network. Take a typical supermarket or corner grocery store, for example, with three cashiers behind point-of-sale terminals. A local area network would connect to the server not only the three cashiers' computers but also those used by the manager and the accountant. The server would typically host the database, along with the business logic shared by client software and any server-side applications.

Over the past 10 years a great wind of change has been blowing in the software industry. The client-server model is increasingly being supplanted by the cloud-mobile model. What does this mean? Instead of relying on servers hosted within company premises, businesses rely on servers hosted in distant data centers; this we call the cloud. Data isn’t the only thing moving to the cloud. Client applications and business logic are moving there as well. What then serves as the client? Computers, be they desktops, laptops, tablets or phones, serve as the clients, and browsers and mobile apps serve as the client applications.

The change is happening for several reasons. Proponents say this new model is convenient. The prospect of getting a whole system up and running within hours, simply by subscribing with a credit or debit card, appeals to many businesses. There is no need to grapple with the technical difficulties that installing a client-server system presents. Many software developers also find this model appealing because they believe it will reduce software piracy. To make this transition, however, businesses need a reliable and efficient telecommunication infrastructure.

Telecommunications today are advanced. We have indeed come a long way. In ancient and medieval times, the homing pigeon was one’s best bet for getting a message home as fast as possible. The homing pigeon is a bird that finds its way back to its nest even after being taken thousands of kilometers away in a cage. The bird’s average flying speed is 80 km/h. This ability was used to send messages: written messages were tied to the birds and read when they got back to their nests in a town or village. The Roman author Frontinus wrote that Julius Caesar used pigeons as messengers in his conquest of Gaul. The Greeks also conveyed the names of the victors at the Olympic Games to various cities using homing pigeons.

In West Africa, messages were sent over several kilometers using talking drums (known locally as Atumpan). Trained drummers transmitted messages at night, and trained interpreters in towns some kilometers away interpreted them correctly and, after some minutes, relayed them to other towns. In this manner messages were carried over long distances through the towns in between.

In 1792, Claude Chappe, a French engineer, built the first fixed visual telegraphy system (or semaphore line) between Lille and Paris. However, semaphore suffered from the need for skilled operators and expensive towers at intervals of ten to thirty kilometres (six to nineteen miles). On July 25, 1837, the first commercial electrical telegraph was demonstrated by William Fothergill Cooke, an English inventor, and Charles Wheatstone, an English scientist. Samuel Morse independently developed a version of the electrical telegraph that he unsuccessfully demonstrated on 2 September 1837.

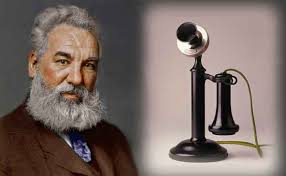

Morse’s code was an important advance over Wheatstone’s signaling method. The conventional telephone was invented independently by Alexander Graham Bell and Elisha Gray in 1876. By 1901 the Italian inventor Guglielmo Marconi had demonstrated that radio waves could be transmitted across the Atlantic.

In the 1960s, researchers started to investigate packet switching, a technology that sends a message in portions to its destination asynchronously without passing it through a centralized mainframe. A four-node network emerged on 5 December 1969, constituting the beginnings of the ARPANET, which by 1981 had grown to 213 nodes. ARPANET eventually merged with other networks to form the Internet.

Today we harness the speed of light, about 300,000 km/s, to communicate and receive feedback almost instantly. Jun-ichi Nishizawa, a Japanese scientist at Tohoku University, proposed the use of optical fibers for communications in 1963. After a period of research starting in 1975, the first commercial fiber-optic communications system was developed; it operated at a wavelength of around 0.8 µm and used GaAs semiconductor lasers. This first-generation system operated at a bit rate of 45 Mbit/s with repeater spacing of up to 10 km. On 22 April 1977, General Telephone and Electronics sent the first live telephone traffic through fiber optics at a throughput of 6 Mbit/s in Long Beach, California.

The internet today is faster by far. Fiber optic cables have increased the available bandwidth tremendously, but is the existing infrastructure sufficient for this transition?

In many places, no. When you run cloud software, every interaction with the application causes data to be transmitted between the server and your computer. This could be as little as 100 KB or as much as several megabytes. Bear in mind that one megabyte is equal to eight megabits, the unit in which network speeds are usually quoted. Now imagine all businesses switching to cloud software hosted at distant datacenters. Clearly, with the current infrastructure in many places, this would lead to a slow network, making cloud software unusable at peak periods. Luckily, the switch will be rapid but not instant, giving countries time to make major infrastructural changes.
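The arithmetic behind this concern can be sketched in a few lines of Python. This is only a rough illustration: the function names, the 2 MB payload, the 16 Mbit/s link and the even split between users are assumptions made for the example, not measurements, and latency and protocol overhead are ignored.

```python
def megabytes_to_megabits(megabytes: float) -> float:
    """One megabyte is eight megabits."""
    return megabytes * 8


def transfer_seconds(payload_megabytes: float, link_mbps: float) -> float:
    """Time to move a payload over a link with the given speed in Mbit/s."""
    return megabytes_to_megabits(payload_megabytes) / link_mbps


def shared_link_seconds(payload_megabytes: float, link_mbps: float,
                        concurrent_users: int) -> float:
    """Same transfer when the link is split evenly between several users
    (a simplifying assumption; real networks share capacity less neatly)."""
    per_user_mbps = link_mbps / concurrent_users
    return transfer_seconds(payload_megabytes, per_user_mbps)


if __name__ == "__main__":
    # Hypothetical numbers: a 2 MB response on a 16 Mbit/s connection.
    print(transfer_seconds(2, 16))        # one user on the link
    print(shared_link_seconds(2, 16, 4))  # four users at a peak period
```

With these illustrative figures, a response that takes one second for a single user takes four seconds when four users share the same link, which is the peak-period slowdown described above.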

It is estimated that bandwidth usage will increase eightfold in the next five years. Governments and telecom companies must make the necessary investments to ensure that cloud-mobile applications thrive. This investment is also necessary to ensure that your internet speed and mine remain as fast as they are today. Think of the internet as a pipe. If more data is sent per second than the pipe can carry, the connection slows down because some data has to wait. To make the switch to the cloud-mobile software model, businesses and governments need a fast, efficient network with large bandwidth and low latency. Many businesses will conduct a cost-benefit appraisal before making the switch, so, needless to say, bandwidth must be affordable as well.
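To get a feel for what an eightfold increase in five years means for the pipe, here is a minimal Python sketch. It assumes steady compound growth, and the demand and capacity figures (100 and 400 Mbit/s) are hypothetical numbers chosen for illustration; the function names are likewise made up for this example.

```python
def annual_growth_factor(total_factor: float, years: int) -> float:
    """Yearly multiplier that compounds to total_factor after the given years."""
    return total_factor ** (1 / years)


def years_until_saturated(demand_mbps: float, capacity_mbps: float,
                          yearly_factor: float) -> int:
    """Whole years until demand exceeds the capacity of the pipe."""
    if yearly_factor <= 1:
        raise ValueError("demand must be growing for the pipe to fill up")
    years = 0
    while demand_mbps <= capacity_mbps:
        demand_mbps *= yearly_factor
        years += 1
    return years


if __name__ == "__main__":
    factor = annual_growth_factor(8, 5)
    print(f"yearly growth: about {(factor - 1) * 100:.0f}%")
    # Hypothetical link: 100 Mbit/s of demand on a 400 Mbit/s pipe.
    print(years_until_saturated(100, 400, factor))
```

Under these assumptions usage grows by roughly half every year, so a pipe with four times today's demand in spare capacity would still be full within four years.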

The second major concern that ought to be addressed is security. Cyber security in general needs improvement. Security must be improved not only at datacenters but also along the networks. Several things immediately come to mind. The first is encryption. Browsers, apps and encryption technologies like OpenSSL must be designed to thwart attempts to sabotage the RSA and DSA public-key authentication mechanisms. Given the MD5 and SHA-1 hashes or the RSA public keys of SSL certificates, it should be impossible for anyone to intercept network traffic undetected as long as the browser displays the same values. If someone does intercept the data, then the problem can be attributed to one or both of two things: either the private key of the SSL certificate has been compromised, or the browser failed to verify the SSL certificate.
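The fingerprint comparison described above can be sketched with Python's standard library. This is a minimal illustration, not a complete verification scheme: the function names are hypothetical, the pinned value is assumed to have been obtained through a trusted channel, and SHA-256 is used in place of MD5 or SHA-1 because those older hashes are no longer considered collision resistant. It complements, rather than replaces, the certificate chain validation a browser performs.

```python
import hashlib
import hmac
import ssl


def fingerprint(der_cert: bytes) -> str:
    """Return the SHA-256 fingerprint of a DER-encoded certificate as hex."""
    return hashlib.sha256(der_cert).hexdigest()


def matches_pinned(der_cert: bytes, pinned_hex: str) -> bool:
    """Compare a certificate's fingerprint with a value obtained out of band."""
    return hmac.compare_digest(fingerprint(der_cert), pinned_hex.lower())


def fetch_der_certificate(host: str, port: int = 443) -> bytes:
    """Download the certificate a server presents (needs network access)."""
    pem = ssl.get_server_certificate((host, port))
    return ssl.PEM_cert_to_DER_cert(pem)
```

A client could call `matches_pinned(fetch_der_certificate(host), pinned_hex)` before trusting a connection; a mismatch suggests that something between the client and the server is presenting a different certificate.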

Another step that must be taken to enhance security is improved oversight of internet service providers or telecom companies. Anyone with unauthorized network access can cause a number of problems ranging from man-in-the-middle attacks to session hijacking. It is important that the necessary rules and procedures are put in place to prevent this problem.

It would be preposterous and totally ludicrous for anybody to say that businesses and organizations harbor no apprehension or trepidation at the thought of their business secrets and most confidential data residing on distant servers. The need for improved security at datacenters cannot be overemphasized. It is necessary to reassure current and prospective users of cloud software and to assuage their fears of a possible data breach. Luckily, several laws have been enacted in countries such as the United States to help prevent data breaches. In today’s world of cooperating and collaborating teams, it is imperative that the best network practices are adhered to in underdeveloped countries as well.

Malware on computers is another security concern that businesses and other organizations must pay attention to. Multi-factor authentication makes it difficult for unauthorized personnel, and even magicians, to access cloud accounts. Every effort must be made to incorporate these safeguards into design principles.
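One common second factor is a time-based one-time password (TOTP), the six-digit code shown by authenticator apps. The sketch below follows the published HOTP/TOTP algorithms (RFC 4226 and RFC 6238) using only the Python standard library. The `verify_login` helper and its parameters are hypothetical, and a real service would also need secure storage of the shared secret, rate limiting and tolerance for clock drift.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password for a given counter value."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password: HOTP over the current 30-second window."""
    now = time.time() if at_time is None else at_time
    return hotp(secret, int(now // step), digits)


def verify_login(password_ok: bool, submitted_code: str, secret: bytes,
                 at_time: Optional[float] = None) -> bool:
    """Hypothetical check: both the password and the current code must match."""
    expected = totp(secret, at_time)
    return password_ok and hmac.compare_digest(submitted_code, expected)
```

Because the code changes every 30 seconds and depends on a secret held on the user's device, a stolen password alone is not enough to sign in.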

This should help you in no small way when deciding between cloud-mobile apps and client-server systems.

Boachsoft LowRider 2017 is excellent work order management software.

Boachsoft also makes excellent personal finance software as well as repair shop management software.

Credit: Yaw Boakye-Yiadom. Copyright (c) 2017 Yaw Boakye-Yiadom and Boachsoft.
